In the midst of this incredible technological shift, two things are clear: organizations are seeing tangible results from AI and the innovation potential is limitless. We aim to empower YOU—whether as a developer, IT pro, AI engineer, business decision maker, or a data professional—to harness the full potential of AI to advance your business priorities. Microsoft’s enterprise experience, robust capabilities, and firm commitments to trustworthy technology all come together in Azure to help you find success with your AI ambitions as you create the future.

This week we’re announcing news and advancements to showcase our commitment to your success in this dynamic era. Let’s get started.

Introducing Microsoft Azure AI Foundry: A unified platform to design, customize, and manage AI solutions

Every new generation of applications brings with it a changing set of needs, and just as web, mobile, and cloud technologies have driven the rise of new application platforms, AI is changing how we build, run, govern, and optimize applications. According to a Deloitte report, nearly 70% of organizations have moved 30% or fewer of their generative AI experiments into production [1], so there is a lot of innovation and results ready to be unlocked. Business leaders are looking to reduce the time and cost of bringing their AI solutions to market while continuing to monitor, measure, and evaluate their performance and ROI.

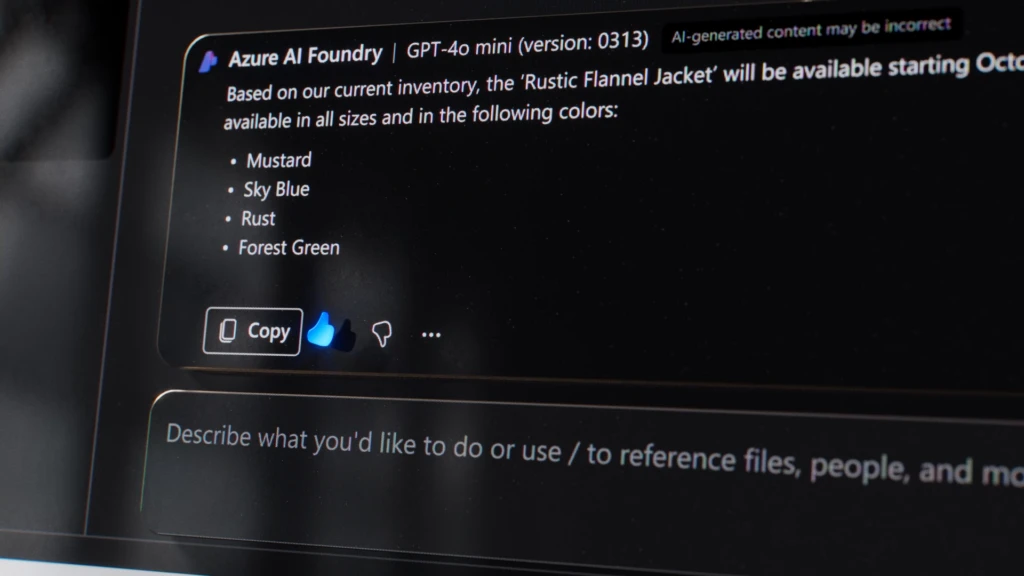

This is why we’re excited to unveil Azure AI Foundry today as a unified application platform for your entire organization in the age of AI. Azure AI Foundry helps bridge the gap between cutting-edge AI technologies and practical business applications, empowering organizations to harness the full potential of AI efficiently and effectively.

We’re unifying the AI toolchain in a new Azure AI Foundry SDK that makes Azure AI capabilities accessible from familiar tools, like GitHub, Visual Studio, and Copilot Studio. We’ll also evolve Azure AI Studio into an enterprise-grade management console and portal for Azure AI Foundry.

Azure AI Foundry is designed to empower your entire organization—developers, AI engineers, and IT professionals—to customize, host, run, and manage AI solutions with greater ease and confidence. This unified approach simplifies the development and management process, helping all stakeholders focus on driving innovation and achieving strategic goals.

For developers, Azure AI Foundry delivers a streamlined process to swiftly adopt the latest AI advancements and focus on delivering impactful applications. Developers will also find an enhanced experience, with access to all existing Azure AI Services and tooling, along with new capabilities we’re announcing today.

For IT professionals and business leaders, adopting AI technologies raises important questions about measurability, ROI, and ongoing optimization. There’s a pressing need for tools that provide clear insights into AI initiatives and their impact on the business. Azure AI Foundry enables leaders to measure the effectiveness of these initiatives, align them with organizational goals, and more confidently invest in AI technologies.

To help you scale AI adoption in your organization, we’re introducing comprehensive guidance for AI adoption and architecture within Azure Essentials so you are equipped to successfully navigate the pace of AI innovation. Azure Essentials brings Microsoft’s best practices, product experiences, reference architectures, skilling, and resources into a single destination. It’s a great way to benefit from all we’ve learned, and the approach you’ll find aligns directly with how to make the most of Azure AI Foundry.

In a market flooded with disparate technologies and choices, we created Azure AI Foundry to thoughtfully address diverse needs across an organization in the pursuit of AI transformation. It’s not just about providing advanced tools, though we have those, too. It’s about fostering collaboration and alignment between technical teams and business strategy.

Now, let’s dive into additional updates designed to enhance the overall experience and efficiency throughout the AI development process, no matter your role.

Introducing Azure AI Agent Service to automate business processes and help you focus on your most strategic work

AI agents have huge potential to autonomously perform routine tasks, boosting productivity and efficiency, all while keeping you at the center. We’re introducing Azure AI Agent Service to help developers orchestrate, deploy, and scale enterprise AI-powered apps to automate business processes. These intelligent agents handle tasks independently, involving human users for final review or action, ensuring your team can focus on your most strategic initiatives.

A standout feature of Agent Service is the ability to easily connect enterprise data for grounding, including Microsoft SharePoint and Microsoft Fabric, and to integrate tools that automate actions. With features like bring your own storage (BYOS) and private networking, it ensures data privacy and compliance, helping organizations protect their sensitive data. This allows your business to leverage existing data and systems to create powerful and secure agentic workflows.

Enhanced observability and collaboration with a new management center experience

To support the development and governance of generative AI apps and fine-tuned models, today we’re unveiling a new management center experience in the Azure AI Foundry portal. This feature brings essential subscription information, such as connected resources, access privileges, and quota usage, into one pane of glass. This can save development teams valuable time and facilitate easier security and compliance workflows throughout the entire AI lifecycle.

Expanding our AI model catalog with more specialized solutions and customization options

From generating realistic images to crafting human-like text, AI models have immense potential, but to truly harness their power, you need customized solutions. Our AI model catalog is designed to provide choice and flexibility and ensure your organization and developers have what they need to explore what AI models can do to advance your business priorities. Along with the latest from OpenAI and Microsoft’s Phi family of small language models, our model catalog includes open and frontier models. We offer more than 1,800 options and we’re expanding to offer even more tailored and specialized task and industry-specific models.

We’re announcing additions that include models from Bria, now in preview, and NTT DATA, now generally available. Industry-specific models from Bayer, Sight Machine, Rockwell Automation, Saifr/Fidelity Labs, and Paige.ai are also available today in preview for specialized solutions in healthcare, manufacturing, finance, and more.

We’ve seen Azure OpenAI Service consumption more than double over the past six months, making it clear customers are excited about this partnership and what it offers [2]. We look forward to bringing more innovation to you with our partners at OpenAI, starting with new fine-tuning capabilities such as vision fine-tuning and distillation workflows. Distillation allows a smaller model like GPT-4o mini to replicate the behavior of a larger model such as GPT-4o through fine-tuning, capturing its essential knowledge and bringing new efficiencies.

Along with unparalleled model choice, we equip you with essential tools like benchmarking, evaluation, and a unified model inference API so you can explore, compare, and select the best model for your needs without changing a line of code. This means you can easily swap out models without the need to recode as new advancements emerge, ensuring you’re never locked into a single model.
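To make the idea of swapping models without recoding more concrete, here is a minimal sketch of the general pattern: application code calls one function, and the model behind it is selected by name. This is not the Azure AI model inference API. The functions `small_model` and `large_model`, the `MODELS` registry, and `complete` are all hypothetical stand-ins invented for illustration; a real application would call a deployed inference endpoint instead.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for deployed models. Real code would send the
# prompt to an inference endpoint rather than format a string locally.
def small_model(prompt: str) -> str:
    return f"[small] {prompt}"

def large_model(prompt: str) -> str:
    return f"[large] {prompt}"

# A single registry maps a model name to a callable with one shared signature.
MODELS: Dict[str, Callable[[str], str]] = {
    "small": small_model,
    "large": large_model,
}

def complete(prompt: str, model_name: str) -> str:
    """Route a prompt to whichever model the configuration names."""
    return MODELS[model_name](prompt)

# Application code stays the same; only the configured name changes.
print(complete("Summarize this ticket.", model_name="small"))
print(complete("Summarize this ticket.", model_name="large"))
```

Because every model sits behind the same signature, comparing or replacing a model becomes a configuration change rather than a code change.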

New collaborations to streamline the model customization process for more tailored AI solutions

We’re announcing collaborations with Weights & Biases, Gretel, Scale AI, and Statsig to accelerate end-to-end AI model customization. These collaborations cover everything from data preparation and generation to training, evaluation, and experimentation with fine-tuned models.

The integration of Weights & Biases with Azure will provide a comprehensive suite of tools for tracking, evaluating, and optimizing a wide range of models in Azure OpenAI Service, including GPT-4, GPT-4o, and GPT-4o mini. This ensures organizations can build AI applications that are not only powerful, but also specifically tailored to their business needs.

The collaborations with Gretel and Scale AI aim to help developers remove data bottlenecks and make data AI-ready for training. With the Gretel integration for Azure OpenAI Service, you can upload Gretel-generated data to Azure OpenAI Service to fine-tune AI models and achieve better performance in domain-specific use cases. Our Scale AI partnership will also help developers with expert feedback, data preparation, and support for fine-tuning and training models.

The Statsig collaboration enables you to dynamically configure AI applications and run powerful experiments to optimize your models and applications in production.

More RAG performance with Azure AI Search

Retrieval-augmented generation, or RAG, is important for ensuring accurate, contextual responses and reliable information. Azure AI Search now features a generative query engine built for high performance, available in select regions. Query rewriting, available in preview, transforms a query and creates multiple variations of it using a small language model (SLM) trained on data typically seen in generative AI applications. In addition, semantic ranker has a new reranking model, trained with more than a year of insights gathered from customer feedback and industry market trends.

With these improvements, we’ve shattered our own performance records—our new query engine delivers up to 12.5% better relevance, and is up to 2.3 times faster than last year’s stack. Customers can already take advantage of better RAG performance today, without having to configure or customize any settings. That means improved RAG performance is delivered out of the box, with all the hard work done for you.

Effortless RAG with GitHub Models and Azure AI Search: just add data

Azure AI Search will soon power RAG in GitHub Models, offering you an easy path to bring RAG to your developer environment in GitHub Codespaces. In just a few clicks, you can experiment with RAG and your data. Directly from the playground, simply upload your data (just drag and drop), and a free Azure AI Search index will automatically be provisioned.

Once you’re ready to build, copy/paste a code snippet into your dev environment to add more data or try out more advanced retrieval methods offered by Azure AI Search.

This means you can unlock a full-featured knowledge retrieval system for free, without ever leaving your code. Just add data.

Advanced vector search and RAG capabilities now integrated into Azure Databases

Vector search and RAG are transforming AI application development by enabling more intelligent, context-aware systems. Azure Databases now integrates innovations from Microsoft Research—DiskANN and GraphRAG—to provide cost-effective, scalable solutions for these technologies.

GraphRAG, available in preview in Azure Database for PostgreSQL, offers advanced RAG capabilities, enhancing large language models (LLMs) with your private PostgreSQL datasets. These integrations help empower developers, IT pros, and AI engineers alike to build the next generation of AI applications efficiently and at cloud scale.

DiskANN, a state-of-the-art suite of algorithms for low-latency, highly scalable vector search, is now generally available in Azure Cosmos DB and in preview for Azure Database for PostgreSQL. It’s also combined with full-text search to power Azure Cosmos DB hybrid search, currently in preview.
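Hybrid search combines a vector ranking with a full-text ranking into one result list. The sketch below shows one widely used way to merge two rankings, reciprocal rank fusion (RRF). The source does not say which fusion method Azure Cosmos DB hybrid search uses, so treat the choice of RRF, the constant `k = 60`, and the sample document IDs as assumptions made for illustration only.

```python
from collections import defaultdict
from typing import Dict, List

def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores 1 / (k + rank) for every list it appears in,
    so items ranked highly by multiple retrievers rise to the top.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties by ID for a stable order.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))

# Hypothetical results from a vector index and a full-text index.
vector_hits = ["doc_b", "doc_a", "doc_c"]
text_hits = ["doc_a", "doc_d", "doc_b"]

fused = reciprocal_rank_fusion([vector_hits, text_hits])
print(fused)  # ['doc_a', 'doc_b', 'doc_d', 'doc_c']
```

Documents that both retrievers return (`doc_a`, `doc_b`) outrank those found by only one, which is the behavior hybrid search is meant to deliver.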

Equipping you with responsible AI tooling to help ensure safety and compliance

We continue to back up our Trustworthy AI commitments with tools you can use, and today we’re announcing two more: AI reports, and risk and safety evaluations for images. These updates help ensure your AI applications are not only innovative, but safe and compliant. AI reports enable developers to document and share the use case, model card, and evaluation results for fine-tuned models and generative AI applications. Compliance teams can easily review, export, approve, and audit these reports across their organization, streamlining AI asset tracking and governance.

We are also excited to announce new collaborations with Credo AI and Saidot to support customers’ end-to-end AI governance. Credo AI pioneered a responsible AI platform enabling comprehensive AI governance, oversight, and accountability. Saidot’s AI Governance Platform helps enterprises and governments manage risk and compliance of their AI-powered systems with efficiency and high quality. By integrating the best of Azure AI with innovative AI governance solutions, we hope to provide our customers with choice and foster greater cross-functional collaboration to align AI solutions with their own principles and regulatory requirements.

Transform unstructured data into multimodal app experiences with Azure AI Content Understanding

AI capabilities are quickly advancing and expanding beyond traditional text to better reflect content and input that matches our real world. We’re introducing Azure AI Content Understanding to make it faster, easier, and more cost-effective to build multimodal applications with text, audio, images, and video. Now in preview, this service uses generative AI to extract information into customizable structured outputs.

Pre-built templates offer a streamlined workflow and opportunities to customize outputs for a wide range of use cases, including call center analytics, marketing automation, content search, and more. And, by processing data from multiple modalities at the same time, this service can help developers reduce the complexities of building AI applications while keeping security and accuracy at the center.

Advancing the developer experience with new AI capabilities and a personal guide to Azure

As a company of developers, we always keep the developer community top of mind with every advancement we bring to Azure. We strive to offer you the latest tech and best practices that boost impact, fit the way you work, and improve the development experience as you build AI apps.

We’re introducing two offerings in Azure Container Apps to help transform how AI app developers work: serverless GPUs, now in preview, and dynamic sessions, available now.

With Azure Container Apps serverless GPUs, you can seamlessly run your custom AI models on NVIDIA GPUs. This feature provides serverless scaling with optimized cold start, per-second billing, built-in scale down to zero when not in use, and reduced operational overhead. It supports easy real-time inferencing for custom AI models, allowing you to focus on your core AI code without worrying about managing GPU infrastructure.

Azure Container Apps dynamic sessions offer fast access to secure sandboxed environments. These sessions are perfect for running code that requires strong isolation, such as large language model (LLM) generated code or extending and customizing software as a service (SaaS) apps. You can mitigate risks, leverage serverless scale, and reduce operational overhead in a cost-efficient manner. Dynamic sessions come with a Python code interpreter pre-installed with popular libraries, making it easy to execute common code scenarios without managing infrastructure or containers.

These new offerings are part of our ongoing work to put Azure’s comprehensive dev capabilities within easy reach. They come right on the heels of announcing the preview of GitHub Copilot for Azure, which is like having a personal guide to Azure. By integrating with tools you already use, GitHub Copilot for Azure enhances Copilot Chat capabilities to help manage resources and deploy applications, and the “@azure” command provides personalized guidance without ever leaving the code.

Updates to our intelligent data platform and Microsoft Fabric help propel AI innovation through your unique data

While AI capabilities are remarkable, even the most powerful models don’t know your specific business. Unlocking AI’s full value requires integrating your organization’s unique data—a modern, fully integrated data estate forms the bedrock of innovation. Fast and reliable access to high-quality data becomes critical as AI applications handle increasing volumes of data requests. This is why we believe in the power of our Intelligent Data Platform as an ideal data and AI foundation for every organization’s success, today and tomorrow.

To help meet the need for high-quality data in AI applications, we’re pleased to announce that Azure Managed Redis is now in preview. In-memory caching helps boost app performance by reducing latency and offloading traffic from databases. This new service offers up to 99.999% availability [3] and comprehensive support, all while being more cost-effective than the current offering. The best part? Azure Managed Redis goes beyond standard caching to optimize AI app performance and works with Azure services. The latest Redis innovations, including advanced search capabilities and support for a variety of data types, are accessible across all service tiers [4].
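As a rough illustration of how in-memory caching offloads traffic from a database, the sketch below implements the common cache-aside pattern with a plain Python dictionary. It does not use Redis or any Azure SDK. The `CacheAside` class, its `ttl_seconds` parameter, and the `slow_lookup` function are hypothetical names chosen for this example, and a production setup would replace the dictionary with a client for a cache service.

```python
import time
from typing import Callable, Dict, Tuple

class CacheAside:
    """Serve reads from memory and fall back to a slower loader on a miss."""

    def __init__(self, load: Callable[[str], str], ttl_seconds: float = 60.0):
        self._load = load            # e.g. a database query (hypothetical)
        self._ttl = ttl_seconds      # how long a cached value stays fresh
        self._store: Dict[str, Tuple[float, str]] = {}
        self.misses = 0              # counts trips to the backing store

    def get(self, key: str) -> str:
        now = time.monotonic()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]          # cache hit: no call to the loader
        # Cache miss or expired entry: load, then remember with an expiry.
        self.misses += 1
        value = self._load(key)
        self._store[key] = (now + self._ttl, value)
        return value

def slow_lookup(key: str) -> str:
    # Stand-in for a database round trip.
    return key.upper()

cache = CacheAside(slow_lookup, ttl_seconds=30.0)
cache.get("customer:42")   # first read goes to the loader
cache.get("customer:42")   # second read is served from memory
print(cache.misses)        # 1
```

Only the first read reaches the backing store; repeat reads within the time-to-live are answered from memory, which is where the latency and load savings come from.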

Just about a year ago, we introduced Microsoft Fabric as our end-to-end data analytics platform. It brought together all the data and analytics tools that organizations needed to empower data and business professionals alike to unlock the potential of their data and lay the foundation for the era of AI. Be sure to check out Arun Ulag’s blog today to learn all about the new Fabric features and integrations we’re announcing this week to help prepare your organization for the era of AI with a single, AI-powered data platform, including the introduction of Fabric Databases.

How will you create the future?

As AI transforms industries and unveils new opportunities, we’re committed to providing practical solutions and powerful innovation to empower you to thrive in this evolving landscape. Everything we’re delivering today reflects our dedication to meeting the real-world needs of both developers and business leaders, ensuring every person and every organization can harness the transformative power of AI.

With these tools at your disposal, I’m excited to see how you’ll shape the future. Have a great Ignite week!

Make the most of Ignite 2024

- Read our Ignite announcements on how to scale your AI transformation with a powerful, secure, and adaptive cloud infrastructure.

- Tune in for can’t-miss sessions at Ignite 2024:

  - Microsoft Ignite Keynote with Satya Nadella and Microsoft leaders.

  - Empowering your AI Ambitions with Azure.

  - Azure AI: Unlocking the AI revolution.

  - Microsoft Fabric: What’s new and what’s next.

  - Trustworthy AI: Future trends and best practices.

- Do a deep dive on all the product innovation rolling out this week over on Tech Community.

- Check out the Master GenAI Model Selection, Evaluation, and Multimodal Integration with Azure AI Foundry Plan on Microsoft Learn. With this outcome-based skilling plan, you can explore the process of selecting and applying GenAI models, how to benchmark and apply multimodal models, and complete evaluations to ensure performance and safety.

- Find out how we’re making it easy to discover, buy, deploy, and manage cloud and AI solutions via the Microsoft commercial marketplace, and get connected to vetted partner solutions today.

- We’re here to help. Check out Azure Essentials guidance for a comprehensive framework to navigate this complex landscape, and ensure your AI initiatives not only succeed but become catalysts for innovation and growth.

References

1. Four futures of generative AI in the enterprise: Scenario planning for strategic resilience and adaptability.

2. Microsoft Fiscal Year 2025 First Quarter Earnings Conference Call.

3. Up to 99.999% uptime SLA is planned for the General Availability of Azure Managed Redis.

4. B0, B1 SKU options, and Flash Optimized tier, may not have access to all features and capabilities.